Gemini 1.5 Flash

A powerful but lightweight AI model from Google

| About | Details |
|---|---|
| Name | Gemini 1.5 Flash |
| Submitted By | Trenton Spencer |
| Release Date | 1 year ago |
| Website | Visit Website |
| Category | |

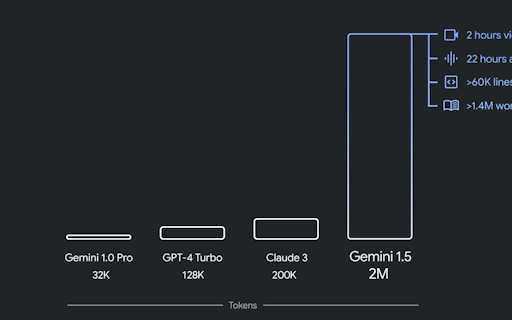

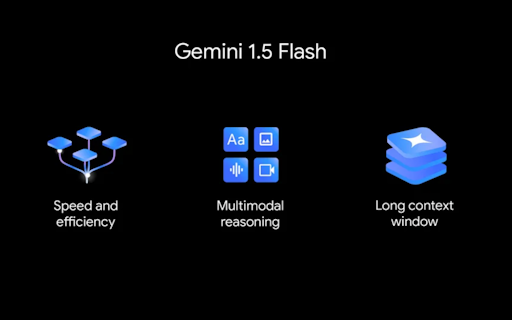

1.5 Flash is the newest addition to the Gemini model family and the fastest Gemini model served in the API. It’s optimized for high-volume, high-frequency tasks at scale, is more cost-efficient to serve, and features our breakthrough long context window.

It's OpenAI and Google now. Every other foundational model is window dressing this week. I have ignored Google, Bard, and Gemini during their lifecycle, but the rate at which they are course-correcting is impressive. I look forward to building some weekend stuff with Gemini and testing it out soon.

1 year ago

Really excited to build with the Gemini 1.5 Flash API! Gemini 1.5 is my preferred LLM API over GPT-4 Turbo because it's about twice as fast and more robust at multimodal tasks (such as recognizing what's in an image).

1 year ago

Absolutely hit the upvote. The idea that we can process big tasks without a big footprint is pretty slick. Just how long is this 'long context window' we're talking about? And how does it balance speed and accuracy?

1 year ago

How does Gemini 1.5 Flash compare to GPT-4o? I felt 1.5 Flash is faster, but 4o is more accurate with results, especially on analytical tasks.

2 years ago