Grok-1

Open source release of xAI's LLM

| About | Details |
|---|---|
| Name | Grok-1 |
| Submitted By | Paul Kshlerin |
| Release Date | 1 year ago |
| Website | Visit Website |
| Category | Twitter GitHub |

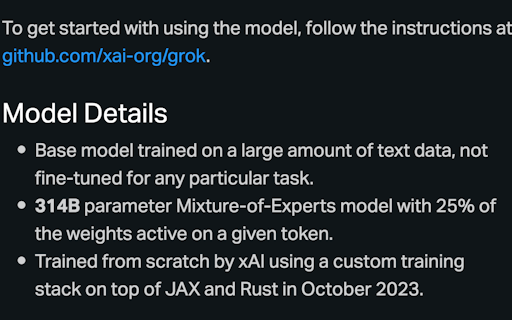

This release contains the base model weights and network architecture of Grok-1, xAI's large language model. Grok-1 is a 314-billion-parameter Mixture-of-Experts model trained from scratch by xAI.
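In a Mixture-of-Experts model, a router scores every expert for each token, but only the top-scoring few are actually run. That is how a model can have a very large total parameter count while using only part of it per token. The sketch below shows generic top-k gating with toy experts. It is not xAI's code. The expert count, the value of `k`, the dot-product router, and the names `moe_forward` and `gate_vectors` are all illustrative assumptions.

```python
import math


def softmax(xs):
    # Numerically stable softmax over a short list of scores.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(token, experts, gate_vectors, k=2):
    """Route one token vector through its top-k experts (generic sketch, hypothetical API)."""
    # Router: one logit per expert, here a plain dot product with that expert's gate vector.
    logits = [sum(t * g for t, g in zip(token, gate)) for gate in gate_vectors]
    # Keep only the k highest-scoring experts; the others are never evaluated.
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax over the chosen logits only, so the mixing weights sum to 1.
    weights = softmax([logits[i] for i in chosen])
    out = [0.0] * len(token)
    for w, i in zip(weights, chosen):
        y = experts[i](token)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, chosen, weights


# Toy setup: 4 hypothetical experts, each of which just scales its input by a constant.
experts = [lambda x, s=i + 1: [s * v for v in x] for i in range(4)]
gate_vectors = [[0.1, 0.0], [0.5, 0.0], [0.9, 0.0], [0.3, 0.0]]

out, chosen, weights = moe_forward([1.0, 0.0], experts, gate_vectors, k=2)
print(chosen)  # → [2, 1]
```

Only experts 2 and 1 are evaluated here. In a real model, the experts are feed-forward networks and the router is learned, but the selection step has the same shape.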

I'd like to see a smaller version of this LLM that uses less memory; not everyone can afford a GPU machine with enough memory to run it.

1 year ago

Great find! I appreciate that they've open-sourced it, although the rationale behind doing so remains unclear. Nevertheless, it's fantastic to witness models of this magnitude being made available to the public. I'm curious to see what people will create with it!

1 year ago

Great hunt! I like that they open-sourced it, but not the reason behind open-sourcing it. Outside of that, it's awesome to see models of this size being released to the public. Interested to see what people will do with it!

1 year ago

We'll be early today to get the stock fully, but yes, I guess you didn't either...

1 year ago

This is huge. Why isn't Grok-1 getting enough votes to rank at the top? It's at least near GPT-4 level, if not beyond it.

1 year ago

The open-source release of Grok-1, xAI's LLM, is a boon for anyone interested in advanced prediction and decision-making AI models. Despite its hardware demands (such as multiple H200 GPUs), the release opens the model up for experimentation and for building groundbreaking applications.

2 years ago
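The hardware concerns raised in these comments come down to simple arithmetic on the 314-billion parameter count: the number of parameters times the bytes stored per parameter. The sketch below estimates memory for the weights alone at a few assumed precisions. The 141 GB per-GPU figure is an assumption based on NVIDIA's published H200 memory size. The function names are hypothetical. Activations, KV cache, and framework overhead are ignored, so real requirements are higher.

```python
import math


def weight_memory_gb(num_params, bits_per_param):
    # Bytes for the weights alone, reported in decimal gigabytes (1e9 bytes).
    return num_params * bits_per_param / 8 / 1e9


def min_gpus_for_weights(num_params, bits_per_param, gpu_memory_gb):
    # Lower bound only: ignores activations, KV cache, and framework overhead.
    return math.ceil(weight_memory_gb(num_params, bits_per_param) / gpu_memory_gb)


GROK1_PARAMS = 314_000_000_000  # "314 billion parameters", from the description above
H200_MEMORY_GB = 141            # assumed per-GPU memory (NVIDIA H200, 141 GB HBM3e)

for bits in (16, 8, 4):
    gb = weight_memory_gb(GROK1_PARAMS, bits)
    gpus = min_gpus_for_weights(GROK1_PARAMS, bits, H200_MEMORY_GB)
    print(f"{bits}-bit weights: {gb:.0f} GB, at least {gpus} x H200")
```

This prints 628 GB (5 GPUs) at 16 bits, 314 GB (3 GPUs) at 8 bits, and 157 GB (2 GPUs) at 4 bits. That is why lower-precision or smaller variants come up whenever a model of this size is released.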