LLaMA

A foundational, 65-billion-parameter large language model

| About | Details |
|---|---|
| Name | LLaMA |
| Submitted By | Jaylon Deckow |
| Release Date | 2 years ago |
| Website | Visit Website |
| Category | Open Source GitHub |

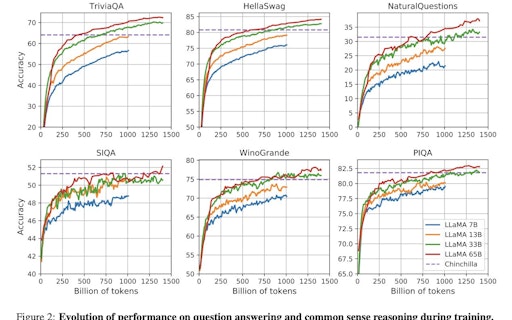

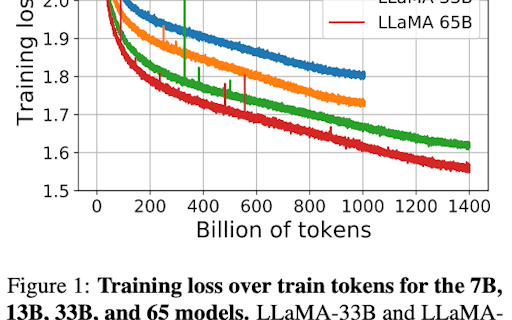

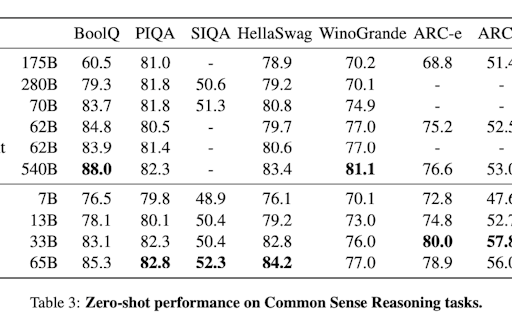

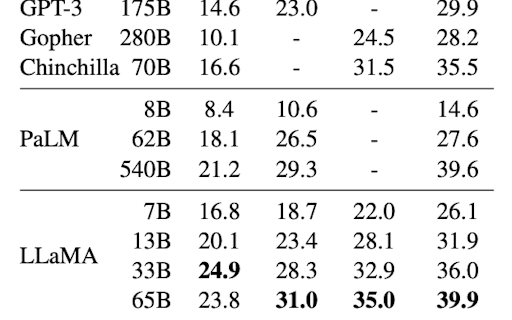

LLaMA is a collection of foundation language models ranging from 7B to 65B parameters. It shows that it is possible to train state-of-the-art models using only publicly available datasets, without resorting to proprietary and inaccessible ones.

What I love about LLaMA is how it opens up new possibilities for natural language processing research and applications. By leveraging publicly available datasets, LLaMA provides a powerful framework for building language models that can understand and generate natural language with remarkable accuracy and fluency.

1 year ago

LLaMA is a testament to the power of collaboration and open research. I hope that this project inspires more researchers to adopt an open science approach.

1 year ago

This is a really good research output for the community 👍 I have been waiting for an open-source model to investigate further the direction transformer-based language models are heading. Given how costly they are to train, having big tech companies like Meta share pre-trained networks is a really good boost. I'd also highlight the concerns about releasing these models into the world, given the many malicious use cases, ranging from generating fake news to propaganda. Only time will tell how we interact with these models 🤖

1 year ago