SeyftAI

A real-time multi-modal content moderation platform

| About | Details |
|---|---|
| Name | SeyftAI |
| Submitted By | Jaylon Deckow |
| Release Date | 1 year ago |
| Website | Visit Website |
| Category | SaaS Tech |

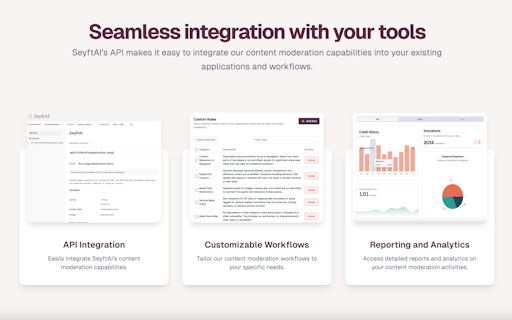

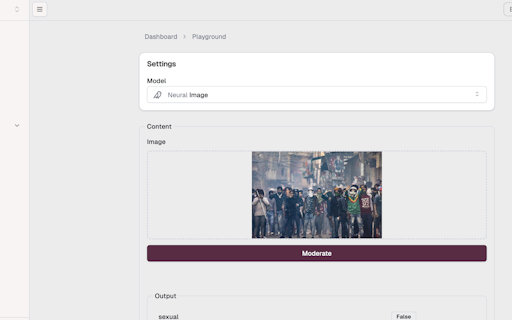

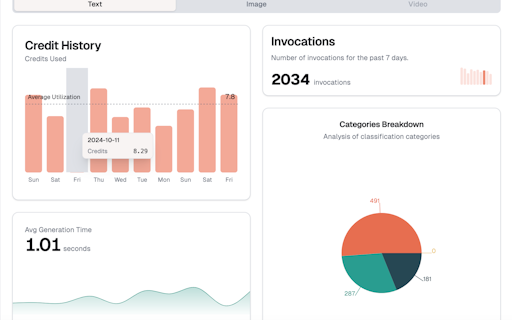

SeyftAI is a real-time, multi-modal content moderation platform that filters harmful and irrelevant content across text, images, and videos, ensuring compliance and offering personalized solutions for diverse languages and cultural contexts.

This is awesome! Just stay away from my WhatsApp group chat with my friends, or we'll all end up getting banned. Haha..

1 year ago

It's a great idea. What you might be struggling with is the pricing, and in fact I see that you don't offer a plan yet. Context is everything when it comes to moderation, and your clients risk experiencing a lot of paid hallucinations. Training through compliance and guidelines is what you need to focus on the most, in my opinion; when a condition is true, for example, you should allow your client to flag it as false with a reason why it is false, and train the bot accordingly.

Let me give you a real example. I added a custom self-harm rule. On the playground I wrote, "I'm laughing so much, I wanna kill myself 😂😂😂". This was flagged as self-harm. The client selects the flagged message and marks it as "Not Self-Harm," providing the reason "humorous exaggeration." The system logs this and adds it to a feedback loop where similar future messages can be reviewed based on this new context. Over time, the system learns to be less strict about phrases containing certain keywords when combined with emojis, positive sentiments, or expressions of humor.

It's probably already on your roadmap, but another way to mitigate hallucinations is the use of NLU. A Natural Language Understanding model should be employed to differentiate between literal and figurative language, and to pass the text to the AI together with its sentiment, for better accuracy.

If you find a way not to break the bank for your clients, and to limit moderation of the moderated content over time, this is a winner. Since having no pricing in place means that you are looking for validation, I definitely support this.

1 year ago
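The feedback loop suggested in the comment above could look roughly like the sketch below. It is only an illustration under stated assumptions: the `Moderator` class, the keyword and humor-marker lists, and the `"review"` outcome are all hypothetical and are not part of SeyftAI's actual API. A simple keyword match stands in for the real model, and a logged "humorous exaggeration" override causes later, similar messages to be routed to review instead of being flagged outright.

```python
from dataclasses import dataclass, field

# Hypothetical rule keywords and humor signals, chosen only for illustration.
SELF_HARM_KEYWORDS = ("kill myself",)
HUMOR_MARKERS = ("😂", "🤣", "laughing", "lol", "haha")


@dataclass
class Override:
    """A client correction: the flagged text, the rule, and the stated reason."""
    text: str
    rule: str
    reason: str


@dataclass
class Moderator:
    """Toy moderator: keyword matching stands in for the real model."""
    overrides: list[Override] = field(default_factory=list)

    def flag(self, text: str) -> str:
        """Return 'ok', 'review', or 'flagged' for the self-harm rule."""
        lowered = text.lower()
        if not any(k in lowered for k in SELF_HARM_KEYWORDS):
            return "ok"
        has_humor = any(m in lowered for m in HUMOR_MARKERS)
        learned = any(
            o.rule == "self-harm" and o.reason == "humorous exaggeration"
            for o in self.overrides
        )
        # After a logged override, humorous matches go to review, not a hard flag.
        if has_humor and learned:
            return "review"
        return "flagged"

    def mark_false_positive(self, text: str, rule: str, reason: str) -> None:
        """Log the client's correction so later decisions can use it."""
        self.overrides.append(Override(text, rule, reason))


moderator = Moderator()
message = "I'm laughing so much, I wanna kill myself 😂😂😂"
first = moderator.flag(message)
moderator.mark_false_positive(message, "self-harm", "humorous exaggeration")
second = moderator.flag("lol I wanna kill myself 😂")
literal = moderator.flag("I want to kill myself")
```

Sending softened cases to review rather than allowing them automatically is a deliberate choice in this sketch: messages without any humor signal keep being flagged, so the override only relaxes the rule where the client's stated reason plausibly applies.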

I can see this being essential for any platform needing real-time, accurate moderation.

1 year ago

I’m looking forward to seeing how it performs in real-world scenarios. Early adopters will have some interesting insights!

1 year ago

Great launch! I'm curious about how it handles different languages and cultural contexts. Also, how does the AI adapt to new types of harmful content that emerge? Looking forward to seeing how this develops!

1 year ago